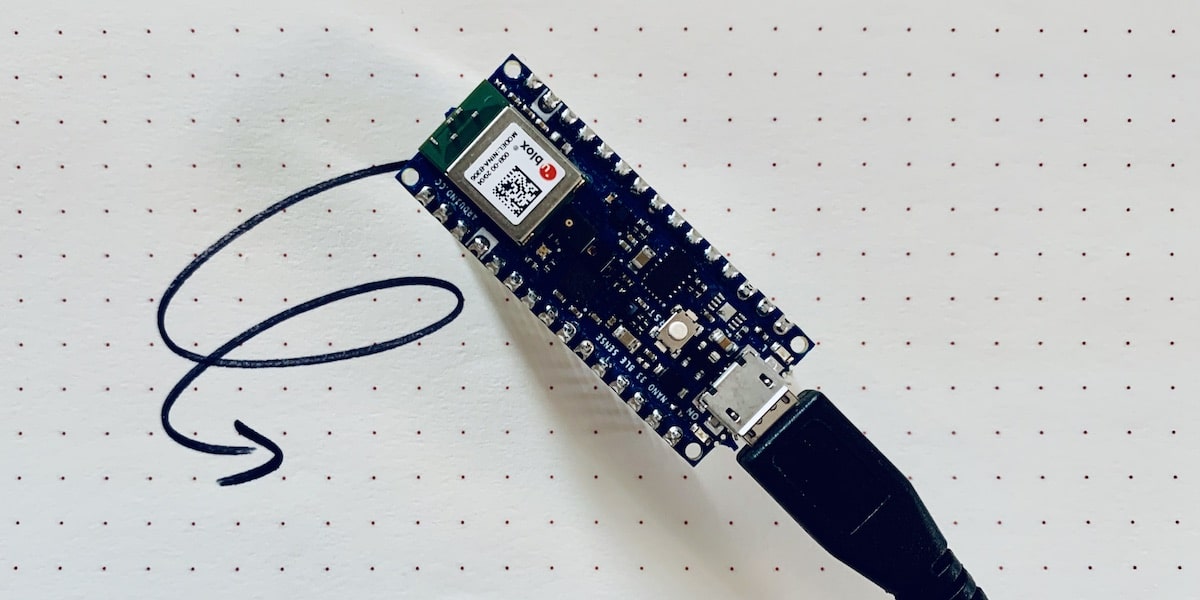

In this project, you will develop an AI model that can recognize complex gestures like circular movements and waving. You will then load it onto your Arduino Nano 33 BLE Sense and display the recognized movements in the terminal of your PC or Mac.

With this setup, you can later build more sophisticated projects, for example to turn lights on and off using gestures.

With some preparation, you can connect the board to the Edge Impulse service and use its built-in accelerometer to recognize much more sophisticated gestures and patterns.

First, read up on how to connect your Arduino to Edge Impulse.

Collecting Motion Data

Every artificial intelligence starts with data. This means that you first need to collect data on the movements you want to distinguish later.

How to collect and store motion data with the Arduino Nano 33 BLE Sense is covered in a separate tutorial, and this project builds on it.

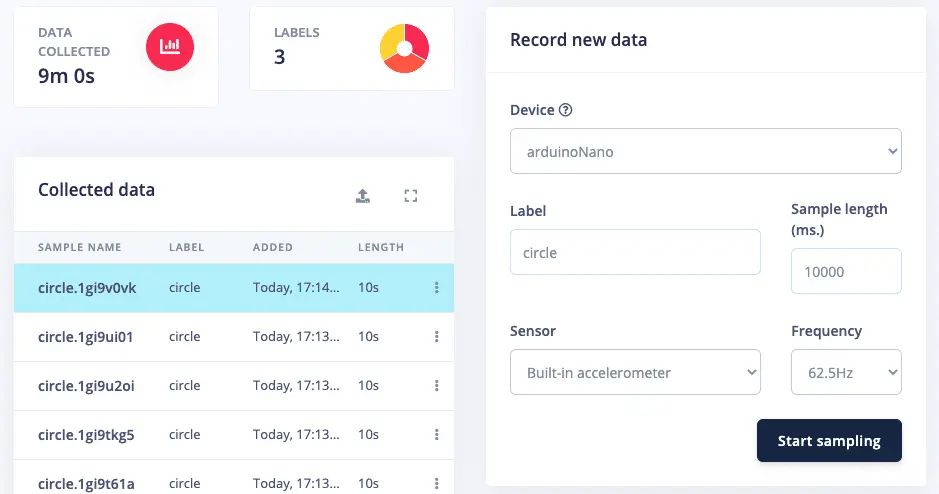

In this project, you’ll use this knowledge to teach your Arduino to recognize whether you’re performing a circular motion, waving in the air, or doing nothing. Open the Data acquisition menu item in Edge Impulse and collect samples for the two movements and the idle state – about 3 minutes each.

Make sure that your movements within a gesture don’t differ too much. However, slight variance is normal and even good. When you’re done, your screen should look something like this:

Next, we’ll move on to training the AI model.

Developing an Impulse

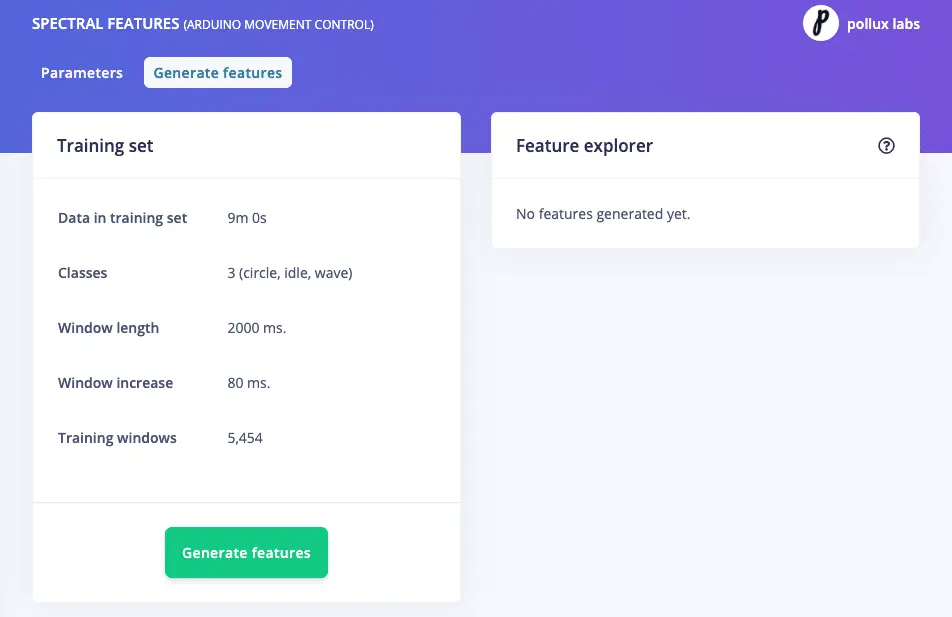

Now we move on to the next menu item: Impulse design. Here you develop so-called features from your raw data, which will later be used to analyze and classify your movements.

On the left you see the Time series data card – you don’t need to set anything here yet. Instead, click on Add a processing block to the right of it and then select Spectral Analysis. This block is particularly suitable for motion data.
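
To get a feeling for what a spectral analysis block extracts from motion data, here is a minimal sketch in Python. It is not Edge Impulse's implementation; the function names, the 50 Hz sample rate and the synthetic 2 Hz "gesture" are assumptions chosen for illustration. It computes two typical features for one accelerometer axis: the RMS value and the dominant frequency.

```python
import cmath
import math


def rms(samples):
    """Root mean square of one axis: a rough measure of movement energy."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def power_spectrum(samples):
    """Naive DFT power for bins 0..N/2 (slow, but fine for short windows)."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the constant offset (e.g. gravity)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(centered))
        spectrum.append(abs(acc) ** 2 / n)
    return spectrum


def dominant_frequency(samples, sample_rate_hz):
    """Frequency (in Hz) of the strongest non-constant component."""
    spectrum = power_spectrum(samples)
    peak_bin = max(range(1, len(spectrum)), key=lambda k: spectrum[k])
    return peak_bin * sample_rate_hz / len(samples)


# Assumption: 50 Hz sampling and a 2-second window with a synthetic 2 Hz motion.
SAMPLE_RATE_HZ = 50
window = [math.sin(2 * math.pi * 2.0 * i / SAMPLE_RATE_HZ) for i in range(100)]

features = {
    "rms": rms(window),
    "dominant_hz": dominant_frequency(window, SAMPLE_RATE_HZ),
}
print(features)
```

A slow circular motion and a fast wave differ in exactly these kinds of values, which is why frequency-based features tend to work well for repetitive gestures.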

On the next card, add a learning block and select Neural Network (Keras). Finally, click on Save Impulse.

A green dot should now appear next to the Create impulse menu item. Now click on Spectral features just below it and then on Generate features at the top.

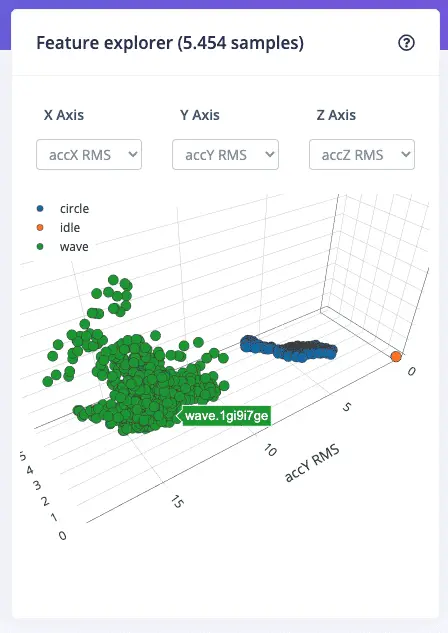

All you need to do here is click on the Generate features button. The process should take only a few seconds. Once it’s complete, you’ll see a visualization of the features and the clusters they form on the right.

As you can see above, the differently colored points form their own clusters that are distant from each other. This should also be the case with your motion data. The better you can distinguish the clusters from each other, the better your AI model can do it later.

If you only see a bunch of colorful points, go back and collect more or new motion data.
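
If you want to put a number on "well separated", the following sketch compares the distance between two cluster centers with the average spread inside the clusters. The metric, the function names and the 2D example points are assumptions made for illustration; Edge Impulse only shows the visualization and does not report this value.

```python
import math


def centroid(points):
    """Mean position of a list of equally sized feature vectors."""
    dims = len(points[0])
    return [sum(p[d] for p in points) / len(points) for d in range(dims)]


def mean_spread(points, center):
    """Average distance of the points to their cluster center."""
    return sum(math.dist(p, center) for p in points) / len(points)


def separation_ratio(class_a, class_b):
    """Distance between the centers divided by the average spread.

    Values well above 1 suggest clearly separated clusters,
    values near or below 1 suggest overlapping ones.
    """
    center_a, center_b = centroid(class_a), centroid(class_b)
    spread = (mean_spread(class_a, center_a) + mean_spread(class_b, center_b)) / 2
    if spread == 0:
        return math.inf  # all points of each class are identical
    return math.dist(center_a, center_b) / spread


# Hypothetical 2D feature points for two gestures.
circle = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.2)]
wave = [(5.0, 5.0), (5.2, 4.8), (4.8, 5.2)]
overlapping = [(1.1, 1.0), (0.9, 1.1), (1.0, 0.9)]

print(separation_ratio(circle, wave))         # large: clusters far apart
print(separation_ratio(circle, overlapping))  # small: clusters overlap
```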

Training the Neural Network

Edge Impulse now has clearly defined data and classes that are easy to distinguish. All that’s missing is a neural network that can assign new data to one of these classes.

In short, a neural network is nothing more than algorithms that try to recognize patterns. You can learn more about this topic on Wikipedia.
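
To make this a bit more concrete, here is a minimal sketch of what a tiny classifier does with a feature vector: one hidden layer, followed by a softmax that turns the outputs into probabilities per class. All weights are made up by hand for illustration, and the two-feature input is an assumption; the network that Edge Impulse trains for you is larger and learns its weights from your data.

```python
import math


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum per output neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Activation function: negative values become zero."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(values)
    exps = [math.exp(v - highest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


LABELS = ["circle", "idle", "wave"]

# Hand-picked example weights (assumption, not trained values).
HIDDEN_W = [[1.0, -1.0], [-1.0, 1.0]]
HIDDEN_B = [0.0, 0.0]
OUTPUT_W = [[2.0, 0.0], [-1.0, -1.0], [0.0, 2.0]]
OUTPUT_B = [0.0, 0.5, 0.0]


def classify(features):
    """Forward pass: features -> hidden layer -> class probabilities."""
    hidden = relu(dense(features, HIDDEN_W, HIDDEN_B))
    probabilities = softmax(dense(hidden, OUTPUT_W, OUTPUT_B))
    return dict(zip(LABELS, probabilities))


scores = classify([2.0, 0.5])
print(max(scores, key=scores.get), scores)
```

Training means adjusting the weights until the highest probability lands on the correct class for as many samples as possible.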

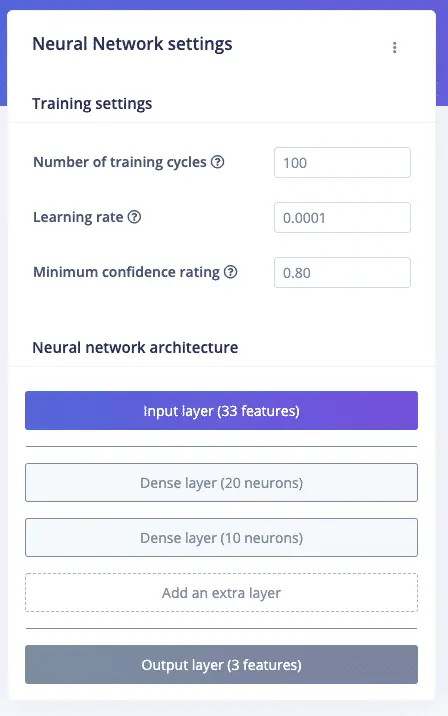

Now click on NN Classifier in the menu.

Leave the settings as they are for now and click on the Start training button. The subsequent training of the network should take a few minutes.

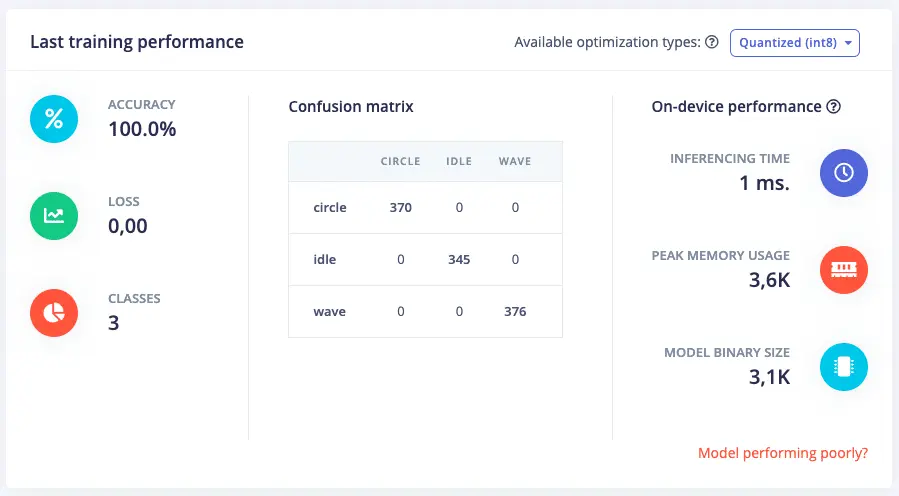

Afterwards, a new card with the results appears. At the top left, under Accuracy, you can see how accurately your neural network works. In our case, the accuracy was 100%, which gives hope that the movements and gestures will be recognized correctly.

If your accuracy is lower, the Model performing poorly? link in the bottom right leads to tips on how to develop a better AI model.
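
As a rough illustration of what such an accuracy value means, the sketch below compares known labels with predicted labels and counts the matches. The label lists are invented example data, and the helper names are assumptions; this is not how Edge Impulse computes its figure internally, only the general idea behind it.

```python
from collections import Counter


def accuracy(true_labels, predicted_labels):
    """Share of samples whose predicted label matches the true label."""
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


def confusion_counts(true_labels, predicted_labels):
    """Count how often each (true, predicted) pair occurs."""
    return Counter(zip(true_labels, predicted_labels))


# Invented example: one circle sample is mistaken for a wave.
true_labels = ["circle", "circle", "wave", "wave", "idle", "idle"]
predicted = ["circle", "wave", "wave", "wave", "idle", "idle"]

acc = accuracy(true_labels, predicted)
confusion = confusion_counts(true_labels, predicted)

print(f"accuracy: {acc:.0%}")
for (true_label, predicted_label), count in sorted(confusion.items()):
    print(f"{true_label} -> {predicted_label}: {count}")
```

The per-pair counts are often more useful than the single number, because they show which two gestures get confused with each other.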

A First Test of the Artificial Intelligence

Now it gets exciting: Does your AI correctly recognize the movements you perform with your Arduino Nano 33 BLE Sense?

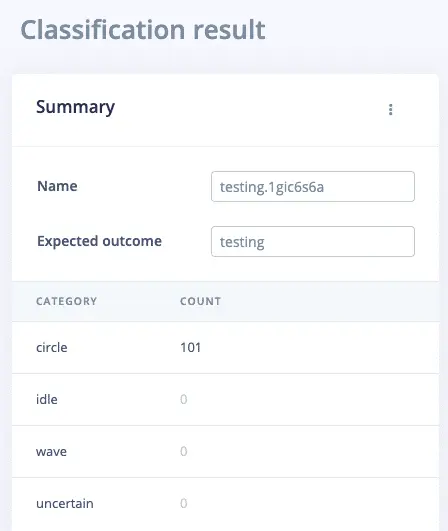

Click on the Live classification menu item. Here you can start a first test by clicking on the Start sampling button and performing one of the trained movements.

After you’ve recorded the sample, the result appears. We performed a circular movement, which the artificial intelligence correctly assigned to the circle class.

If this didn’t work for you, it’s best to go back a few steps and try to collect better motion data or train your neural network better.

But if you’re satisfied, it’s time to bring the AI to your Arduino.

Running the AI on the Arduino

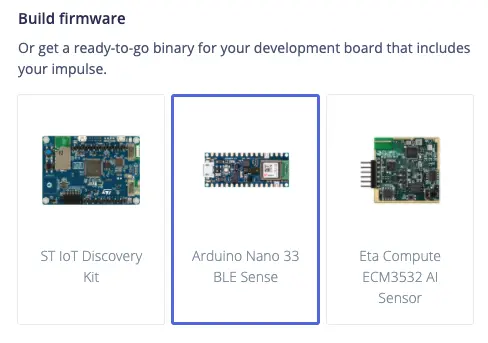

For this, click on the Deployment menu item and select the Arduino Nano 33 BLE Sense under the Build firmware heading.

You can also have an Arduino library created that you can include in a sketch. However, we’ll stick with the simpler variant here.

Further down, you also have the option to make optimizations to your AI model, but we’ll leave these aside for now as well.

Next, click on the Build button. Now the firmware with your AI model will be created, which can take a few minutes again.

Next, you’ll receive a zip file with the firmware. Run one of the following files, depending on which operating system you’re using:

- flash_windows.bat

- flash_mac.command

- flash_linux.sh

Before you run the script, end the data sampling process and close the terminal it was running in.

After installing the firmware, open a new terminal window and run this command:

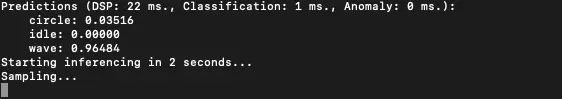

edge-impulse-run-impulseNow your AI is running in this terminal and checking every two seconds whether it detects a movement of your Arduino and if so, which one. Here it recognized a wave with a probability of 96% – which was correct:
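
If you later want to react to these results in your own program, a first step could look like the following sketch. It assumes that the terminal output contains one indented line per class in the form label: probability; check this against what your terminal actually prints, since the exact format is an assumption here. The 0.8 threshold, the class names and the function names are also illustrative choices.

```python
import re

# Assumed line format: "    wave: 0.96" (label, colon, probability).
LINE_PATTERN = re.compile(r"^\s*([A-Za-z_][\w-]*):\s*([0-9]*\.?[0-9]+)\s*$")


def parse_predictions(text):
    """Collect label -> probability pairs from a block of output text."""
    scores = {}
    for line in text.splitlines():
        match = LINE_PATTERN.match(line)
        if match:
            scores[match.group(1)] = float(match.group(2))
    return scores


def detect_gesture(scores, threshold=0.8, idle_label="idle"):
    """Return the most likely gesture, or None if idle or too uncertain."""
    if not scores:
        return None
    label = max(scores, key=scores.get)
    if label == idle_label or scores[label] < threshold:
        return None
    return label


# Hand-written sample in the assumed format, not copied from a real run.
SAMPLE_OUTPUT = """Predictions:
    circle: 0.02
    idle: 0.02
    wave: 0.96
"""

scores = parse_predictions(SAMPLE_OUTPUT)
print(detect_gesture(scores))
```

Using a threshold and ignoring the idle class keeps a gesture-controlled project from reacting to every small, uncertain movement.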

What’s next?

You now have a functioning artificial intelligence on your Arduino that can recognize complex movements and gestures.

Take it a step further and integrate it into a new project that you can control with gestures. Your Arduino Nano 33 BLE Sense also has a microphone that you can use in Edge Impulse. Collect sounds and develop an AI that can distinguish between them. Your imagination is the only limit! 🙂